There’s a famous thought experiment called the Infinite Monkey Theorem.

Put an infinite number of monkeys in an infinite room with an infinite number of typewriters. Give them infinite time. Eventually—guaranteed—one of them will produce the complete works of Shakespeare.

The Infinite Monkey Theorem teaches two surprising lessons:

Infinity is a cheat code: brute force + time = guaranteed outcome.

Extraordinary outcomes don’t require extraordinary intelligence if you can run enough attempts and keep the good ones.

Now replace “monkeys” with coding agents. Most developers today are running the constrained version of the experiment:

One monkey, one typewriter, one room.

You open Cursor (or any AI editor), you prompt a single agent, and then you do the classic routine: yell at the monkey until something valuable falls out.

And to be clear: this monkey is not dumb. Thanks to modern frontier models, it’s often closer to a very smart intern than an animal smashing keys.

But you’re still bottlenecked by the same constraint: your attention is glued to one linear thread. One attempt at a time. One branch at a time. One “try again” at a time.

If your goal is Shakespeare, that workflow is leaving a ridiculous amount of leverage on the table.

Battle Royale for agents

A simple version looks like this:

Give the same task to N agents (sometimes same model, sometimes different).

Let them work in parallel.

Compare the outputs.

Merge the best one.
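Here is a minimal Python sketch of those four steps. It assumes a hypothetical `run_agent` function that launches one agent and returns a scored result. The model names and the seeded random scores are placeholders, not any real tool's API.

```python
import random
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass


@dataclass
class Attempt:
    agent_id: int
    model: str
    patch: str
    score: float


def run_agent(agent_id: int, model: str, task: str) -> Attempt:
    """Hypothetical stand-in for one agent run.

    A real version would launch an agent on `task` in its own sandbox,
    wait for it to finish, and score its patch with your checks.
    Here the score is a seeded random number so the demo is repeatable.
    """
    rng = random.Random(agent_id)
    return Attempt(agent_id, model, f"patch from agent {agent_id}", rng.random())


def best_of_n(task: str, models: list[str], n: int) -> Attempt:
    # Give the same task to N agents, cycling through the model list so
    # some attempts share a model and others use a different one.
    with ThreadPoolExecutor(max_workers=n) as pool:
        futures = [
            pool.submit(run_agent, i, models[i % len(models)], task)
            for i in range(n)
        ]
        attempts = [f.result() for f in futures]
    # Compare the outputs and keep the best one to merge.
    return max(attempts, key=lambda a: a.score)


winner = best_of_n("example task", ["model-a", "model-b"], n=8)
print(f"merge agent {winner.agent_id} ({winner.model}), score {winner.score:.3f}")
```

Threads are enough here because each real agent run would mostly be waiting on a model or a subprocess, not burning local CPU.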

At that point, you’re not “pair programming with a model” anymore. You’re judging a tiny tournament.

Hypothetically, if an agent has even a 10% chance of one-shotting a task, the probability that at least one of N independent attempts succeeds is 1 - 0.9^N, which is already about 65% with just 10 agents.
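The arithmetic behind that claim fits in a few lines. This sketch assumes every attempt succeeds independently with the same probability p. That is optimistic: attempts from the same model on the same prompt tend to fail in correlated ways, so real odds will be lower.

```python
def p_at_least_one(p: float, n: int) -> float:
    """Probability that at least one of n independent attempts succeeds,
    when each attempt succeeds with probability p: 1 - (1 - p) ** n."""
    return 1 - (1 - p) ** n


for n in (1, 5, 10, 20, 40):
    print(f"N = {n:>2}: {p_at_least_one(0.10, n):.1%}")
```

With p = 0.10 the loop prints roughly 10.0%, 41.0%, 65.1%, 87.8% and 98.5% for N = 1, 5, 10, 20 and 40.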

That’s the Infinite Monkeys insight: you don’t need perfection—you need volume + selection pressure.

But there’s a catch.

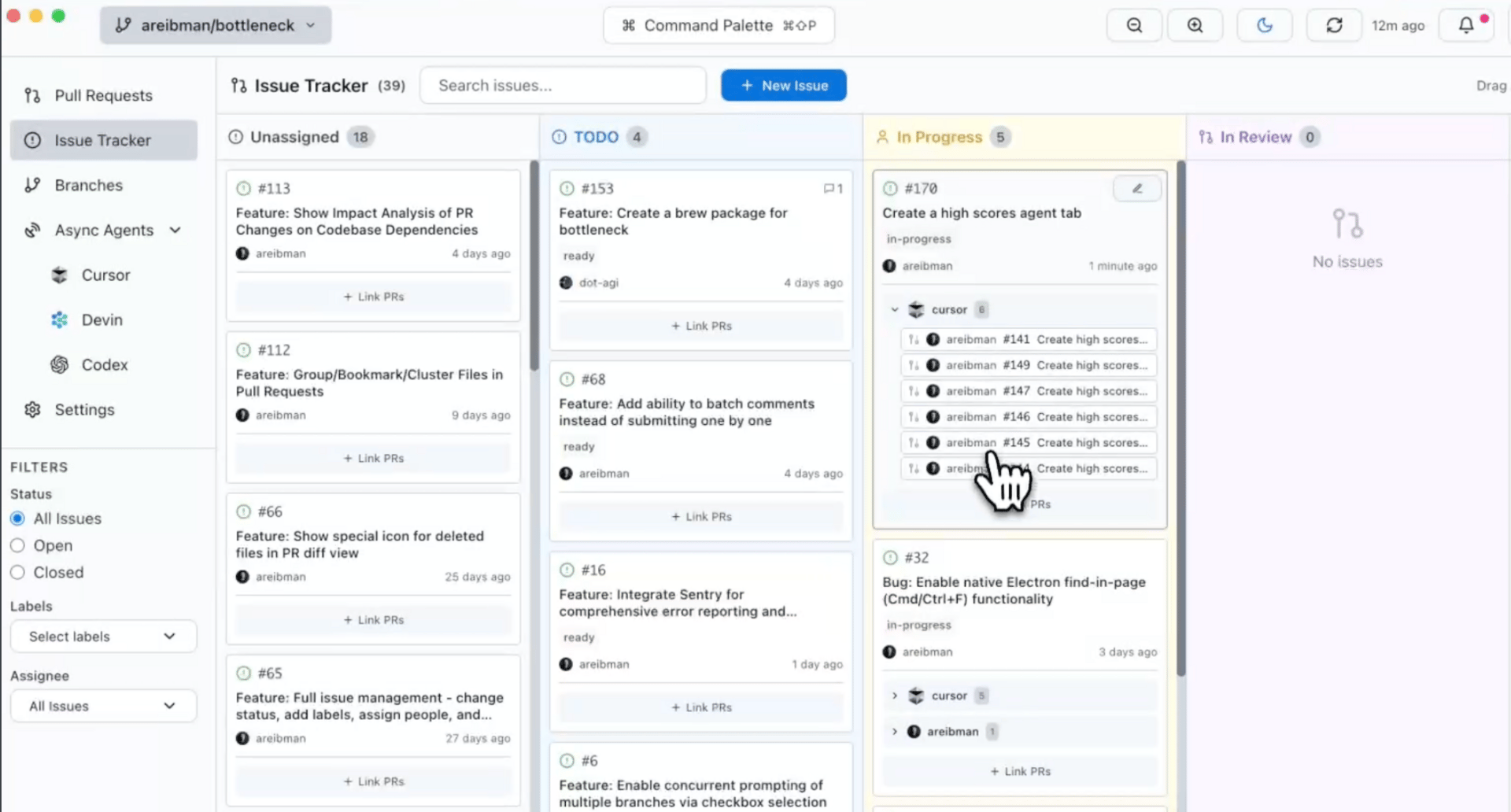

Running multiple parallel agents with Cursor

The real bottleneck is not generation. It’s verification.

If you spawn 40 agents and then have to manually read, run, and sanity-check everything they do, you haven’t created leverage.

You’ve created monkey slop at scale.

The hyper-engineering strategy only works if you also build the post-filter: the process that tells you which outputs are worth your attention. For example, tools like Bottleneck make it easy to compare pull request diffs from different agents working on the same issue. (The app itself was built in very short order using the best-of-N approach.)
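A post-filter can start very simply. The sketch below (all names hypothetical) assumes each candidate arrives with its diff and a pass/fail result per check. It drops anything that failed or skipped a required check and collapses identical patches, so only distinct, passing candidates reach a human.

```python
import hashlib
from dataclasses import dataclass, field


@dataclass
class Candidate:
    agent_id: str
    diff: str
    checks: dict[str, bool] = field(default_factory=dict)  # check name -> passed?


def post_filter(candidates: list[Candidate], required: list[str]) -> list[Candidate]:
    """Keep only candidates worth a human's attention: every required
    check passed, and no identical diff has already been kept."""
    seen: set[str] = set()
    survivors = []
    for c in candidates:
        if not all(c.checks.get(name, False) for name in required):
            continue  # a missing or failed check disqualifies the candidate
        digest = hashlib.sha256(c.diff.encode()).hexdigest()
        if digest in seen:
            continue  # two agents produced the same patch; review it once
        seen.add(digest)
        survivors.append(c)
    return survivors
```

Treating a missing check as a failure is deliberate: an agent that skipped the tests should not outrank one that ran them.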

Managing multiple agents in parallel

Simon Willison has been hammering this point from the “vibe coding” angle: “Your job is to deliver code you have proven to work.”

In other words: your job isn’t to generate more code.

Your job is to tighten the definition of “works” so verification becomes cheap.

Step 1: Define “Shakespeare”

Screenshot from a GitHub PR’s CI checks (not AI, found on Google Images)

The Infinite Monkey Theorem hides the hardest part: what counts as “the complete works of Shakespeare”?

Exact match? Formatting included? Typos allowed? Different language? Same plot but different words?

Software is the same. If you can’t crisply define success, you can’t select winners.

So define success as exit conditions:

Unit, integration, and end-to-end tests (e.g., Cypress, Playwright)

Linting + type checks + accessibility checks

“No regression” snapshots

The agent doesn’t decide when it’s done. The tests do.
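One way to let the tests decide is a small runner that executes every exit condition and treats exit status 0 as the only definition of done. This is a sketch: the placeholder commands stand in for your real test, lint, and type-check commands, and `is_done` is a hypothetical helper, not part of any agent tool.

```python
import subprocess
import sys

# Hypothetical exit conditions. Replace these placeholder commands with your
# project's real ones, e.g. ["npx", "playwright", "test"] for end-to-end tests.
EXIT_CONDITIONS: dict[str, list[str]] = {
    "unit tests": [sys.executable, "-c", "print('tests ok')"],
    "lint": [sys.executable, "-c", "print('lint ok')"],
}


def is_done(conditions: dict[str, list[str]], cwd: str = ".") -> tuple[bool, dict[str, int]]:
    """Run every exit condition and report each exit code.

    The task counts as done only if every command exits with status 0;
    the agent's own claim of being finished is never consulted.
    """
    codes = {}
    for name, cmd in conditions.items():
        proc = subprocess.run(cmd, cwd=cwd, capture_output=True, text=True)
        codes[name] = proc.returncode
    return all(code == 0 for code in codes.values()), codes


if __name__ == "__main__":
    done, codes = is_done(EXIT_CONDITIONS)
    print("done" if done else f"not done: {codes}")
```

Running every condition, rather than stopping at the first failure, gives the agent (or you) a full picture of what is still broken.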

“Let’s say you can’t write a 10/10 [poem], but you can decide when something is a 10. That might be all that we need.”

Furthermore, instruct your agents to produce tangible proof of success. This may include screenshots, video recordings, or replay files.

Step 2: Focus, Isolation, Tooling

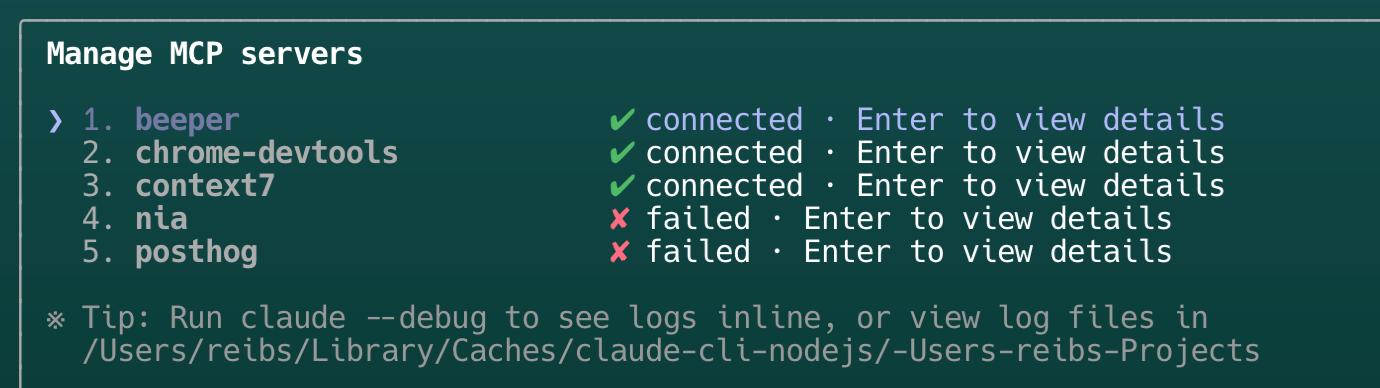

MCPs I’ve found to be immensely helpful in Claude Code

Parallel agents are powerful, but they’ll happily:

refactor unrelated files

invent abstractions nobody asked for

“improve” your architecture mid-task

Constrain them.

Don’t overcomplicate the typewriter. The tighter the box, the higher the hit rate and the easier the review. Practical constraints:

Scope: Limit which files your agents can access

Interfaces: Restrict agents to using only specific tools and docs

Budget: Auto-exit after N minutes of execution.
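The scope and budget constraints can be enforced mechanically rather than by prompt. This sketch assumes you can list the files an agent changed (for example with `git diff --name-only`) and that the agent runs as a command you launch. The allowed patterns and helper names are hypothetical.

```python
import fnmatch
import subprocess
import sys
from typing import Optional

# Hypothetical scope for one task: the only paths this agent may change.
# fnmatch's "*" also matches "/", so "src/auth/*" covers subfolders too.
ALLOWED = ["src/auth/*", "tests/auth/*"]


def out_of_scope(changed_files: list[str], allowed: list[str]) -> list[str]:
    """Return the changed files that match none of the allowed patterns."""
    return [
        path for path in changed_files
        if not any(fnmatch.fnmatchcase(path, pattern) for pattern in allowed)
    ]


def run_with_budget(cmd: list[str], minutes: float) -> Optional[int]:
    """Run an agent command and kill it once its time budget is spent.

    Returns the exit code, or None if the budget ran out first.
    """
    try:
        return subprocess.run(cmd, timeout=minutes * 60).returncode
    except subprocess.TimeoutExpired:
        return None  # subprocess.run has already killed the child process


if __name__ == "__main__":
    print(out_of_scope(["src/auth/login.py", "README.md"], ALLOWED))  # ['README.md']
    print(run_with_budget([sys.executable, "-c", "print('agent finished')"], minutes=1))
```

A non-empty `out_of_scope` result is a cheap reason to reject an attempt before anyone reads its diff.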

But also don’t be afraid to give your agents the tools (usually MCPs) they need to be successful. Modern agents get meaningfully better when they can:

search docs

read logs/traces

use a browser

execute test suites

run and install CLI tools

check devtools/profilers

Loading the right MCPs/skills turns the typewriter into a proper workstation.

Step 3: Make sandboxes truly isolated (or parallelism collapses)

Keep your monkeys focused and isolated

If agents share state, you get chaos:

port collisions + shared caches

race conditions on local resources

“works on my machine” times ten

The hyper-engineering ideal is simple:

Each agent gets its own environment (its own “laptop”), the way human engineers do.

Isolation is not a nice-to-have. It’s the difference between parallel exploration and self-inflicted interference.
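A lightweight approximation of per-agent laptops is to give every agent its own git worktree, branch, port, and cache directory. The sketch below only plans those resources and builds the `git worktree add` command. The directory layout, branch naming, and base port are assumptions, and full isolation (containers or VMs) goes further than this.

```python
from dataclasses import dataclass
from pathlib import Path


@dataclass(frozen=True)
class Sandbox:
    agent_id: int
    worktree: Path   # separate checkout, so agents never edit the same files
    branch: str      # separate branch, so each result is its own diff/PR
    port: int        # separate dev-server port, so no port collisions
    cache_dir: Path  # separate cache, so no shared build/test state


def plan_sandboxes(repo: Path, task_slug: str, n: int, base_port: int = 3000) -> list[Sandbox]:
    # Assumes ports base_port .. base_port + n - 1 are free on this machine.
    root = repo.parent / f"{repo.name}-agents"
    return [
        Sandbox(
            agent_id=i,
            worktree=root / f"{task_slug}-{i}",
            branch=f"agent/{task_slug}-{i}",
            port=base_port + i,
            cache_dir=root / ".cache" / f"{task_slug}-{i}",
        )
        for i in range(n)
    ]


def worktree_command(repo: Path, box: Sandbox) -> list[str]:
    # `git worktree add -b <branch> <path>` creates a new branch checked out
    # in its own directory while sharing the repository's object store.
    return ["git", "-C", str(repo), "worktree", "add", "-b", box.branch, str(box.worktree)]
```

Each agent would then be started with its sandbox's worktree as the working directory and its port and cache directory passed in through its environment.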

Selection is the product

A real “best-of-N hyper-engineering” system should feel like:

spawn 20 attempts

automatic scoring (tests/linters/benchmarks)

ranked outputs

diffs side-by-side

merge the winner

store the eval results so you learn which prompts/models worked
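The scoring, ranking, and record-keeping steps might look like this minimal sketch. The `Result` fields, the ranking order, and the JSON Lines log are all assumptions chosen for illustration. The point is that ranking is a deterministic function of check results and that every attempt gets logged, not just the winner.

```python
import json
from dataclasses import asdict, dataclass
from pathlib import Path


@dataclass
class Result:
    agent_id: str
    model: str
    prompt_id: str
    tests_passed: int
    tests_total: int
    lint_errors: int
    runtime_s: float  # e.g. a benchmark's wall-clock time


def score(r: Result) -> tuple[float, int, float]:
    # Hypothetical ranking rule: test pass rate first, then fewer lint
    # errors, then faster benchmarks. Tune the order to your project.
    pass_rate = r.tests_passed / r.tests_total if r.tests_total else 0.0
    return (pass_rate, -r.lint_errors, -r.runtime_s)


def rank(results: list[Result]) -> list[Result]:
    """Best candidate first."""
    return sorted(results, key=score, reverse=True)


def log_results(results: list[Result], winner_id: str, path: Path) -> None:
    """Append one JSON line per attempt, marking which one was merged,
    so you can later ask which prompts and models tend to win."""
    with path.open("a", encoding="utf-8") as f:
        for r in results:
            f.write(json.dumps({**asdict(r), "won": r.agent_id == winner_id}) + "\n")
```

Sorting on a tuple keeps the ranking rule explicit: ties on pass rate fall through to lint errors, then to runtime.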

Because once you do that, you unlock the second-order benefit:

You’re not just shipping faster—you’re generating training signal.

Every time a human picks the winning agent output, you’ve created a clean preference signal: this was better than that. That’s the raw material of better evals and, eventually, better agent behavior.
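Turning a pick into preference data is mostly bookkeeping. In this hypothetical sketch, choosing one winner among N candidates yields N - 1 pairwise records of the form “winner beat loser”. The field names are an assumed schema, not a specific training format.

```python
def preference_pairs(task_id: str, winner: str, candidates: list[str]) -> list[dict[str, str]]:
    """Turn one human pick into pairwise records: the winner is preferred
    over every other candidate, so picking 1 of N yields N - 1 pairs."""
    if winner not in candidates:
        raise ValueError(f"winner {winner!r} is not among the candidates")
    # "chosen"/"rejected" is a hypothetical schema; a real record would also
    # store the prompt and both outputs, not just their IDs.
    return [
        {"task": task_id, "chosen": winner, "rejected": other}
        for other in candidates
        if other != winner
    ]


print(preference_pairs("task-1", "agent-3", ["agent-1", "agent-2", "agent-3"]))
```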

You’re probably not spending enough on agents.

In 2026, intelligence is still shockingly cheap relative to output. That’s why some Hyper Engineers spend upwards of $18,000 per month on agents.

Just another day in the Hyper Engineering Twitter group chat (DM me if you also spend a ton)

If you’re treating agents like a copilot you occasionally consult, you’re playing small.

The correct mental model is: these are junior collaborators you can scale horizontally—as long as you build the verification and selection machinery.

You don’t need one agent that can reliably write a perfect 10/10 solution every time. You need:

multiple shots on goal

a system that can reliably recognize a 10 when it appears.

Hyper engineering isn’t “AI writes my code.” It’s building a system that lets you reliably and consistently ship AI code into production at high volume.

Or, in monkey terms:

Don’t sit in a room arguing with a monkey.
